Wikidata for data journalism (with R and ‘tidywikidatar’)

Dataharvest 2022

Giorgio Comai

(OBCT/CCI - EDJNet)

22 May 2022

Two big questions

1. how to you get Wikidata to throw at you the data you need

2. how to retrieve Wikidata's data in a way that won't give you headaches

Access Wikidata

Web front-end, nice to read: https://www.wikidata.org/wiki/Q162022

SPARQL: https://www.wikidata.org/wiki/Wikidata:SPARQL_query_service/queries/examples

API version https://www.wikidata.org/w/api.php?action=wbgetentities&ids=Q162022

- machine readable

- nested lists, structure changes depending on values

What's the matter (for R users)?

- R users mostly hate nested lists

- some probably also hate SPARQL, but even more simply don't know much about SPARQL

- if you don't know what to expect, it's a pain to process data

- existing tools are not fit for the iterative data analysis process that is at the core of data journalism

- re-running an analysis with minor changes is a very common part of the workflow... without built-in caching, this can be painfully slow

tidywikidatar

Check out website with documentation and examples: https://edjnet.github.io/tidywikidatar/

- everything in tabular format

- one row, one piece of information

- easy local caching

- easy integration with

dplyrpiped routines - get image credits from WikiMedia commons

- include Wikipedia in the exploration, or use it as a starting point

tidywikidatar

The basics

Enable local caching

library(dplyr, warn.conflicts = FALSE)library("tidywikidatar")tw_enable_cache()tw_set_cache_folder( path = fs::path(fs::path_home_r(), "R", "tw_data"))tw_set_language(language = "en")tw_create_cache_folder(ask = FALSE)Or e.g. MySQL

library(dplyr, warn.conflicts = FALSE)library("tidywikidatar")tw_enable_cache(SQLite = FALSE)tw_set_cache_db(driver = "MySQL", host = "localhost", port = 3306, database = "tidywikidatar", user = "secret_username", pwd = "secret_password")Get an item

tw_search("Mechelen")| id | label | description | |

|---|---|---|---|

| 1 | Q162022 | Mechelen | city in the province of Antwerp, Belgium |

| 2 | Q100020 | Mechelen | town in Gulpen-Wittem, Netherlands |

| 3 | Q21765751 | Mechelen | town in Mechelen municipality, Belgium |

| 4 | Q406287 | Mechelen | Wikimedia disambiguation page |

| 5 | Q3597347 | 13293 Mechelen | asteroid |

Get an item

tw_search("Mechelen") %>% slice(1) %>% tw_get()Get a specific property

tw_search("European Union") %>% slice(1) %>% tw_get_property(p = "P150") %>% # contains administrative territorial entity tw_label()| id | property | value | rank | |

|---|---|---|---|---|

| 1 | European Union | contains administrative territorial entity | Belgium | preferred |

| 2 | European Union | contains administrative territorial entity | Germany | preferred |

| 3 | European Union | contains administrative territorial entity | France | preferred |

| 4 | European Union | contains administrative territorial entity | Italy | preferred |

| 5 | European Union | contains administrative territorial entity | Luxembourg | preferred |

What about qualifiers?

e.g. when did member states join the EU?

https://www.wikidata.org/wiki/Q458#P150

tw_get_qualifiers(id = "Q458", # European Union p = "P150") # contains administrative territorial entity## # A tibble: 37 × 8## id property qualifier_id qualifier_prope… qualifier_value qualifier_value…## <chr> <chr> <chr> <chr> <chr> <chr> ## 1 Q458 P150 Q31 P580 +1958-01-01T00… time ## 2 Q458 P150 Q183 P580 +1958-01-01T00… time ## 3 Q458 P150 Q142 P580 +1958-01-01T00… time ## 4 Q458 P150 Q142 P1012 Q3769 wikibase-entity…## 5 Q458 P150 Q142 P1012 Q17012 wikibase-entity…## 6 Q458 P150 Q142 P1012 Q17054 wikibase-entity…## 7 Q458 P150 Q142 P1012 Q17063 wikibase-entity…## 8 Q458 P150 Q142 P1012 Q17070 wikibase-entity…## 9 Q458 P150 Q142 P1012 Q126125 wikibase-entity…## 10 Q458 P150 Q38 P580 +1958-01-01T00… time ## # … with 27 more rows, and 2 more variables: rank <chr>, set <dbl>What about qualifiers?

tw_get_qualifiers(id = "Q458", # European Union p = "P150") %>% # contains administrative territorial entity filter(qualifier_property == "P580") %>% # keep only "start time" transmute(country = tw_get_label(qualifier_id), start_time = qualifier_value)## # A tibble: 28 × 2## country start_time ## <chr> <chr> ## 1 Belgium +1958-01-01T00:00:00Z## 2 Germany +1958-01-01T00:00:00Z## 3 France +1958-01-01T00:00:00Z## 4 Italy +1958-01-01T00:00:00Z## 5 Luxembourg +1958-01-01T00:00:00Z## 6 Kingdom of the Netherlands +1958-01-01T00:00:00Z## 7 Denmark +1973-01-01T00:00:00Z## 8 Republic of Ireland +1973-01-01T00:00:00Z## 9 Greece +1981-01-01T00:00:00Z## 10 Portugal +1986-01-01T00:00:00Z## # … with 18 more rows- for more, check

tw_get_property_with_details()

Dealing with multiple results when only one is needed

Easy questions can be difficult: in which country is London?

tibble::tibble(city_qid = c("Q84")) %>% dplyr::mutate(city_label = tw_get_label(city_qid), country_qid = tw_get_p(id = city_qid, p = "P17")) %>% tidyr::unnest(country_qid) %>% mutate(country = tw_get_label(country_qid))## # A tibble: 8 × 4## city_qid city_label country_qid country ## <chr> <chr> <chr> <chr> ## 1 Q84 London Q2277 Roman Empire ## 2 Q84 London Q110888 Kingdom of Essex ## 3 Q84 London Q105092 Kingdom of Mercia ## 4 Q84 London Q105313 Kingdom of Wessex ## 5 Q84 London Q179876 Kingdom of England ## 6 Q84 London Q161885 Great Britain ## 7 Q84 London Q174193 United Kingdom of Great Britain and Ireland## 8 Q84 London Q145 United KingdomDealing with multiple results when only one is needed

- keeping first result is tricky

- keeping only preferred may still give more than one result

- people who love tabular data often want just one result, that needs to be "good enough"

tibble::tibble(city_qid = c("Q84", "Q220")) %>% dplyr::mutate(city_label = tw_get_label(city_qid), country_qid = tw_get_p(id = city_qid, p = "P17", preferred = TRUE, # latest_start_time = TRUE, only_first = TRUE)) %>% dplyr::mutate(country_label = tw_get_label(country_qid))## # A tibble: 2 × 4## city_qid city_label country_qid country_label ## <chr> <chr> <chr> <chr> ## 1 Q84 London Q145 United Kingdom## 2 Q220 Rome Q38 ItalyLess typing

tw_qid_meps %>% head() %>% mutate(name = tw_get_label(id), pob = tw_get_p1(id = id, p = "P19")) %>% mutate(pob_label = tw_get_label(id = pob), pob_coordinates = tw_get_p1(id = pob, p = "P625"))## # A tibble: 6 × 5## id name pob pob_label pob_coordinates## <chr> <chr> <chr> <chr> <chr> ## 1 Q157 François Hollande Q30974 Rouen 49.44305555555…## 2 Q329 Nicolas Sarkozy Q90 Paris 48.85694444444…## 3 Q1220 Giorgio Napolitano Q2634 Naples 40.83333333333…## 4 Q1275 Gladwyn Jebb Q163 Yorkshire 53.95833333333…## 5 Q2105 Jacques Chirac Q238723 5th arrondissement of Paris 48.84722222222…## 6 Q2512 Kurt Georg Kiesinger Q7019 Albstadt 48.21194444444…More properties, all at once

tw_qid_meps %>% head() %>% tw_get_p_wide(p = c("P21", "P27", "P569"), property_label_as_column_name = FALSE, label = FALSE, only_first = TRUE)## # A tibble: 6 × 4## id P21 P27 P569 ## <chr> <chr> <chr> <chr> ## 1 Q157 Q6581097 Q142 +1954-08-12T00:00:00Z## 2 Q329 Q6581097 Q142 +1955-01-28T00:00:00Z## 3 Q1220 Q6581097 Q38 +1925-06-29T00:00:00Z## 4 Q1275 Q6581097 Q145 +1900-04-25T00:00:00Z## 5 Q2105 Q6581097 Q142 +1932-11-29T00:00:00Z## 6 Q2512 Q6581097 Q183 +1904-04-06T00:00:00Ztw_qid_meps %>% head() %>% tw_get_p_wide(p = c("P21", "P27", "P569"), property_label_as_column_name = TRUE, label = TRUE, only_first = TRUE)## # A tibble: 6 × 5## id label sex_or_gender country_of_citizenship date_of_birth ## <chr> <chr> <chr> <chr> <chr> ## 1 Q157 François Hollande male France +1954-08-12T0…## 2 Q329 Nicolas Sarkozy male France +1955-01-28T0…## 3 Q1220 Giorgio Napolitano male Italy +1925-06-29T0…## 4 Q1275 Gladwyn Jebb male United Kingdom +1900-04-25T0…## 5 Q2105 Jacques Chirac male France +1932-11-29T0…## 6 Q2512 Kurt Georg Kiesinger male Germany +1904-04-06T0…Different entry points

Search

tw_search()- search strings

Query

tw_query()- simple queries based on property/value couplestw_get_all_with_p()- get all items that have a given property, irrespective of their value

Based on Wikipedia

tw_get_wikipedia_page_links()- Get all Wikidata Q identifiers of all Wikipedia pages linked to inputtw_get_wikipedia_page_section_links()- All identifiers found in a specific section of a Wikipedia page

An example starting from Wikipedia

Election of the President of the Republic in Italy

- Election of the President of the Republic in Italy

- the electoral college can vote literally for whoever they like

- the list ends up including very different candidates, from respected intellectuals to football players and porn actors

- almost all of them with one thing in common: they are on Wikidata, but Wikidata does not know they have something in common.

Wikidata identifiers

Take a single section:

df <- tw_get_wikipedia_page_section_links( title = "Elezione del Presidente della Repubblica Italiana del 2022", section_title = "IV scrutinio", language = "it")df %>% select(wikipedia_title, qid)| wikipedia_title | qid |

|---|---|

| Adnkronos | Q359875 |

| Alberto Airola | Q14636378 |

| Aldo Giannuli | Q3609233 |

| Alessandro Altobelli | Q346945 |

| Alessandro Barbero | Q960451 |

| Carlo Nordio | Q19357364 |

| Dino Zoff | Q180661 |

| Domenico De Masi | Q3713005 |

Find out more

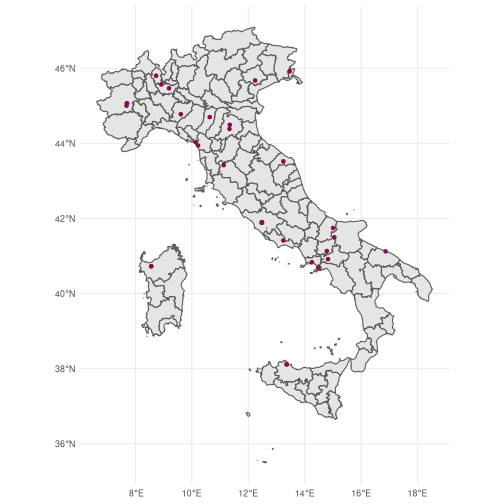

pob_df <- df %>% select(qid) %>% mutate(name = tw_get_label(qid)) %>% mutate(place_of_birth_id = tw_get_p(id = qid, p = "P19",only_first = TRUE)) %>% mutate(place_of_birth = tw_get_label(place_of_birth_id)) %>% mutate(place_of_birth_coordinates = tw_get_p(id = place_of_birth_id, p = "P625", only_first = TRUE))pob_df## # A tibble: 36 × 5## qid name place_of_birth_… place_of_birth place_of_birth_…## <chr> <chr> <chr> <chr> <chr> ## 1 Q359875 Adnkronos <NA> <NA> <NA> ## 2 Q14636378 Alberto Airola Q9474 Moncalieri 45,7.683333 ## 3 Q3609233 Aldo Giannuli Q3519 Bari 41.125277777778…## 4 Q346945 Alessandro Altobe… Q128211 Sonnino 41.414458333333…## 5 Q960451 Alessandro Barbero Q495 Turin 45.066666666667…## 6 Q19357364 Carlo Nordio Q5475 Treviso 45.672219444444…## 7 Q180661 Dino Zoff Q53131 Mariano del F… 45.916666666667…## 8 Q3713005 Domenico de Masi Q277969 Rotello 41.7475,15.0041…## 9 Q3723207 Elisabetta Belloni Q220 Rome 41.893055555556…## 10 Q726247 Franco Grillini Q94979 Pianoro 44.383333333333…## # … with 26 more rowsHere they are on a map

pob_sf <- pob_df %>% tidyr::separate( col = place_of_birth_coordinates, into = c("pob_latitude","pob_longitude"), sep = ",", remove = TRUE, convert = TRUE) %>% filter(is.na(pob_latitude)==FALSE) %>% sf::st_as_sf(coords = c("pob_longitude", "pob_latitude"), crs = 4326)library("ggplot2")pop_gg <- ggplot() + geom_sf(data = ll_get_nuts_it(level = 3, no_check_certificate = TRUE)) + geom_sf(data = pob_sf, colour = "deeppink4") + theme_minimal()## ℹ Source: https://www.istat.it/it/archivio/222527## ℹ Istat (CC-BY)

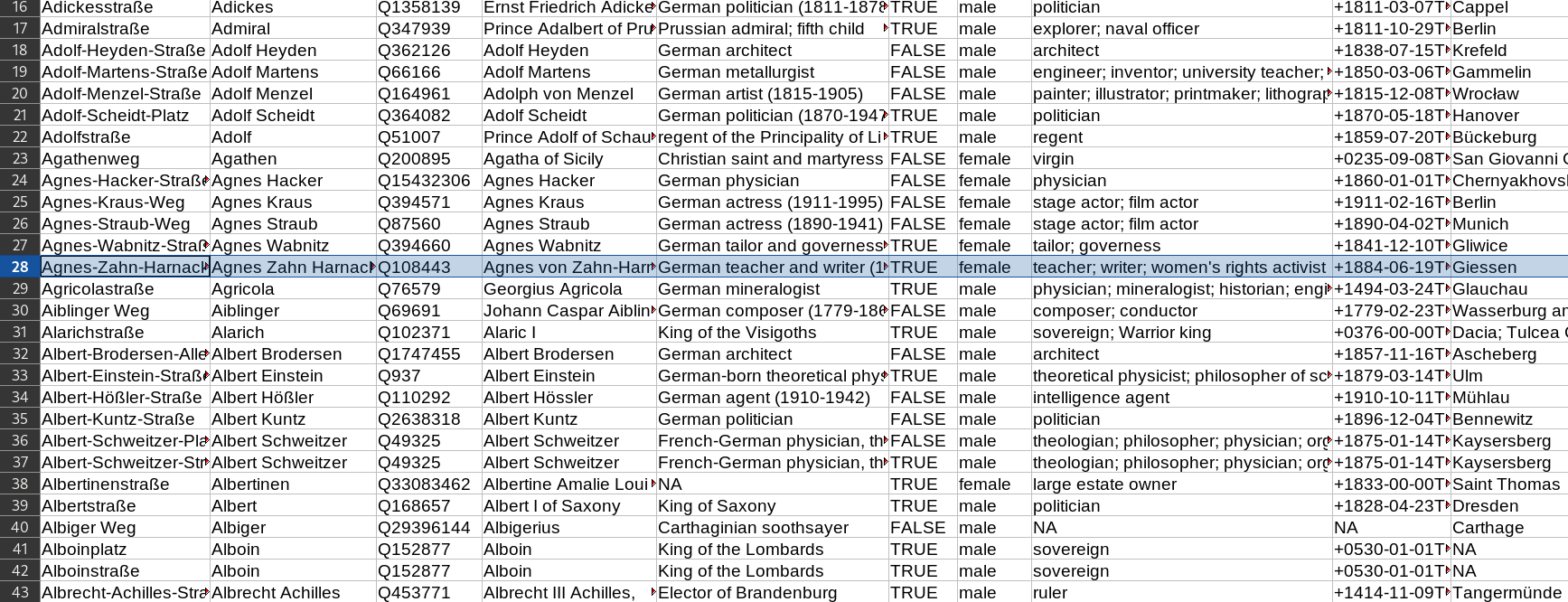

All the usual things we expect from Wikidata

occupation_df <- df %>% pull(qid) %>% tw_get_property(p = "P31") %>% # get "instance of" filter(value == "Q5") %>% # keep only humans tw_get_property(p = "P106") %>% # get occupation # filter(value!="Q82955") %>% # exclude politicians group_by(value) %>% count(sort = TRUE) %>% ungroup() %>% transmute(occupation = tw_get_label(value), n)| occupation | n |

|---|---|

| politician | 71 |

| university teacher | 19 |

| judge | 16 |

| lawyer | 14 |

| jurist | 10 |

| deputy chairperson | 9 |

| basketball player | 9 |

| physician | 9 |

| clerk | 9 |

| journalist | 7 |

| sociologist | 5 |

| association football player | 5 |

| economist | 4 |

| psychologist | 4 |

| academic | 4 |

| banker | 4 |

| association football manager | 3 |

| historian | 2 |

| writer | 2 |

| high civil servant | 1 |

| essayist | 1 |

| music director | 1 |

| professor | 1 |

| entrepreneur | 1 |

| media proprietor | 1 |

| conductor | 1 |

| diplomat | 1 |

| film director | 1 |

| sports executive | 1 |

| radio personality | 1 |

| medievalist | 1 |

| theatrical director | 1 |

| film critic | 1 |

| magistrate | 1 |

| musician | 1 |

| television presenter | 1 |

And other things useful for data visualisation and interactive interfaces, e.g. quick access to images...

president_df <- tw_search("Sergio Mattarella") %>% tw_filter_first(p = "P31", q = "Q5") president_df %>% tw_get_image()## # A tibble: 3 × 2## id image ## <chr> <chr> ## 1 Q3956186 Sergio Mattarella Presidente della Repubblica Italiana.jpg## 2 Q3956186 Sergio Mattarella Presidente della Repubblica Italiana.jpg## 3 Q3956186 Sergio Mattarella Presidente della Repubblica Italiana.jpgpresident_df %>% tw_get_image(format = "embed", width = 300) %>% pull(image)## [1] "https://commons.wikimedia.org/w/index.php?title=Special:Redirect/file/Sergio Mattarella Presidente della Repubblica Italiana.jpg&width=300"## [2] "https://commons.wikimedia.org/w/index.php?title=Special:Redirect/file/Sergio Mattarella Presidente della Repubblica Italiana.jpg&width=300"## [3] "https://commons.wikimedia.org/w/index.php?title=Special:Redirect/file/Sergio Mattarella Presidente della Repubblica Italiana.jpg&width=300"...with metadata and credits line

president_df %>% tw_get_image_metadata() %>% tidyr::pivot_longer(cols = -1, names_to = "property", values_transform = as.character)## # A tibble: 18 × 3## id property value ## <chr> <chr> <chr> ## 1 Q3956186 image_filename "Sergio Mattarella Presidente della Repu…## 2 Q3956186 object_name "Sergio Mattarella Presidente della Repu…## 3 Q3956186 image_description "Official picture of the <a href=\"https…## 4 Q3956186 categories "Attribution only license|Images from th…## 5 Q3956186 assessments "" ## 6 Q3956186 credit "<a rel=\"nofollow\" class=\"external te…## 7 Q3956186 artist "Unknown author<span style=\"display: no…## 8 Q3956186 permission <NA> ## 9 Q3956186 license_short_name "Attribution" ## 10 Q3956186 license_url <NA> ## 11 Q3956186 license <NA> ## 12 Q3956186 usage_terms <NA> ## 13 Q3956186 attribution_required <NA> ## 14 Q3956186 copyrighted <NA> ## 15 Q3956186 restrictions "personality" ## 16 Q3956186 date_time "2022-05-14 10:47:24" ## 17 Q3956186 date_time_original "2022-01-29" ## 18 Q3956186 commons_metadata_extension "1.2"Back and forth between Wikidata and Wikipedia

This gets the Q identifier of all pages linked from a the Wikipedia page of a given Q identifier. Easy peasy :-)

president_df %>% tw_get_wikipedia(language = "it") %>% # gets url of Wikipedia page from QID tw_get_wikipedia_page_links(language = "it") %>% select(wikipedia_title, qid)## # A tibble: 491 × 2## wikipedia_title qid ## <chr> <chr>## 1 Fabio Vander <NA> ## 2 Ordine per Meriti Eccezionali <NA> ## 3 Discussioni template:Capi di Stato d'Europa <NA> ## 4 1941 Q5231## 5 1987 Q2429## 6 1989 Q2425## 7 1990 Q2064## 8 1998 Q2089## 9 1999 Q2091## 10 2001 Q1988## # … with 481 more rowsStarting from strings

Integration with interactive interfaces

A couple of examples of practical use cases

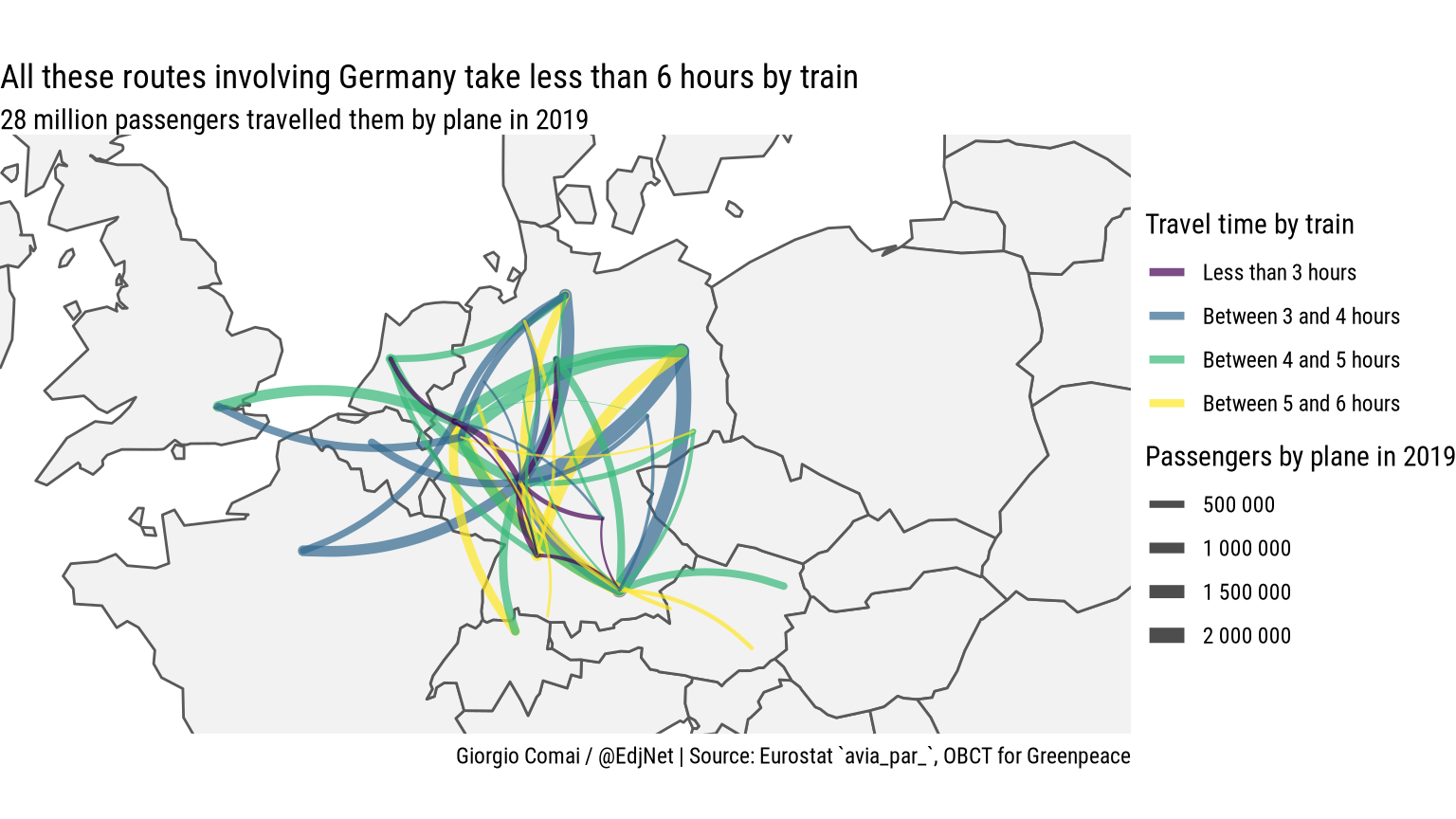

Main air routes that could be travelled by train

Wikidata used for: defining city hubs for airports, getting coordinates of airports (for excluding those on islands), use unique identifiers for merging with train dataset https://edjnet.github.io/european_routes/

Mapping diversity

Issues and next steps

General issues

- if you are processing many thousands of items, the current approach can be very slow when run for the first time (near instant thanks to caching later)

- no obvious long term solution, but a future version will allow for an easier way to share the cache to make sure others can also run the script instantly

- no easy way to "give back" to Wikidata

tidywikidatar

Check out website with documentation and examples: https://edjnet.github.io/tidywikidatar/

- everything in tabular format

- one row, one piece of information

- easy local caching

- easy integration with

dplyrpiped routines - get image credits from WikiMedia commons

- include Wikipedia in the exploration, or use it as a starting point